News & Views: Open Access Books – Part I

This month we look at the growth of Open Access (OA) books, using key statistics from the Directory of Open Access Books (DOAB) to examine how rapidly the index has grown.

Background

The Directory of Open Access Books (DOAB) was originally developed in 2012. It is one of three platforms run by the Netherlands-based OAPEN Foundation and France’s OpenEdition. OAPEN provides infrastructure for OA books and promotes their awareness and discovery. It was originally an EU-funded project and became a foundation in 2010.

OAPEN’s other two platforms are the OAPEN Library, an OA book repository, and the OAPEN Open Access Books Toolkit for authors. The DOAB covers a superset of books in the OAPEN Library, so we use its data for the most comprehensive coverage. The DOAB focuses on academic books, which must be made available under an open access license and subjected to independent, external peer review prior to publication.

Growth of the DOAB

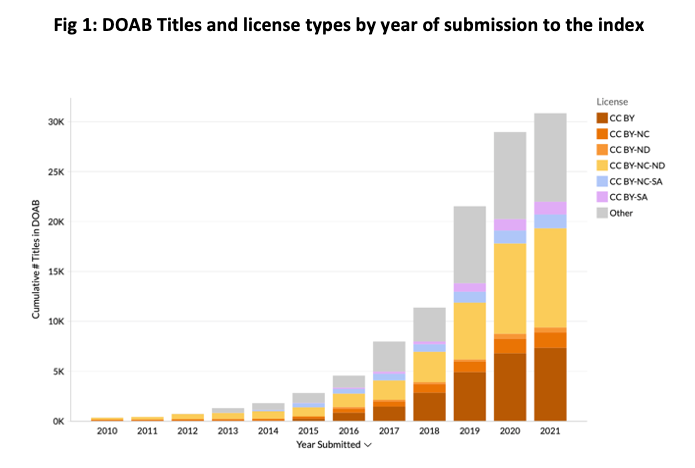

We first analyzed the total number of titles in the DOAB and their licenses, as shown in Figure 1. Figures for 2021 are year-to-date (YTD) to the end of June 2021; all other numbers are for the full year.

Source: DOAB, Delta Think Analysis.

We can see that the DOAB now indexes over 30,000 titles.

- The charts show the cumulative number of titles growing over time.

- License proportions are largely consistent over time.

- Just over 71% of titles use CC licenses.

- CC BY-NC-ND licenses are the most common (32% of the index).

- CC BY licenses are the second-most common (24% of the index).

- The numbers above do not include the circa 5,000 titles with submission dates unspecified in the publicly available data. (At the time of writing – July 2021 – the team at the DOAB are working on a fix.) We have excluded these titles from the chart above. Subscribers to our OA Data and Analytics Tool will be able to see the updated figures when they are released by the DOAB.

- Submissions for 2010 and 2011 were imported from an OAPEN service set up in 2010.

Make-up of the DOAB

The overall proportions of licenses in use by the index remain fairly constant over time. However, within the averages we see some interesting things depending on language and publisher.

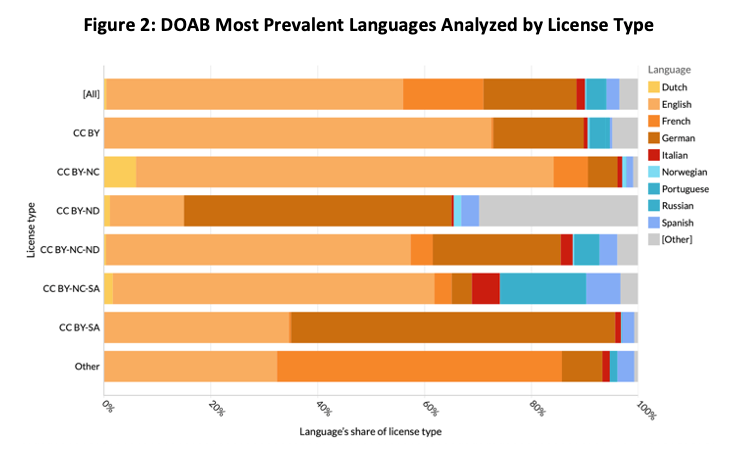

The figure above shows how the different languages making up the index relate to license types. Each horizontal bar represents a license. The colors show how the titles under each license are split between languages.

- Overall (the top bar), English is the most common language, covering 55.5% of the index, with German second (17.4%) and French third (15%). The remaining 12.1% of titles are split between around 40 languages in total. The most prevalent licenses are shown above; the rest form a long tail.

- English accounts for 72.6% of CC BY and 78.2% of CC BY-NC licensed books.

- Compare this with German, which accounts for 60.7% of CC BY-SA and 50.1% of CC BY-ND.

- French covers the largest share (53.3%) of non-CC or unspecified licenses.
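The per-license language split described above can be sketched in a few lines of Python. This is only an illustration of the arithmetic, not the analysis pipeline behind the figure: the function name `language_share_by_license` and the sample records are hypothetical, and the records are made up rather than taken from the DOAB.

```python
from collections import Counter, defaultdict


def language_share_by_license(titles):
    """Return {license: {language: percentage of that license's titles}}.

    `titles` is an iterable of (license, language) pairs, one per title.
    """
    counts = defaultdict(Counter)
    for license_name, language in titles:
        counts[license_name][language] += 1

    shares = {}
    for license_name, by_language in counts.items():
        total = sum(by_language.values())
        shares[license_name] = {
            language: round(100 * n / total, 1)
            for language, n in by_language.items()
        }
    return shares


# Made-up records for illustration only; these are NOT DOAB figures.
sample = [
    ("CC BY", "English"),
    ("CC BY", "English"),
    ("CC BY", "English"),
    ("CC BY", "German"),
    ("CC BY-SA", "German"),
    ("CC BY-SA", "English"),
]

shares = language_share_by_license(sample)
for license_name, by_language in shares.items():
    print(license_name, by_language)
```

Each inner dictionary corresponds to one horizontal bar in the figure: the percentages within a license sum to roughly 100, and each percentage is the width of one colored segment.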

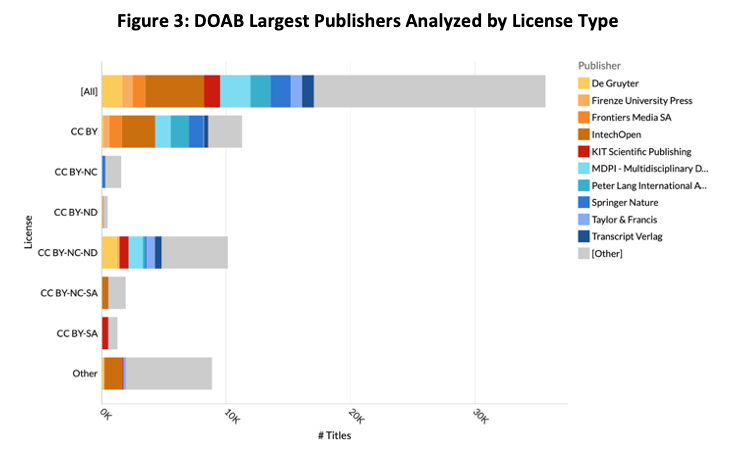

The figure above looks at how the largest publishers contribute to the index, and their preferred licenses. Each horizontal bar represents a license. The colors show how the titles under each license are split between publishers. The length of each bar shows the total number of titles in the index under that license, so you can see the relative weight of each.

- The 10 largest publishers together account for 47.9% of the index (top bar). Another 460 or so publishers make up the remaining 52.1% of the titles.

- The largest publishers are now IntechOpen (13.2% of titles), MDPI (6.8%), then De Gruyter, Peter Lang and Springer Nature (ranging from 4.7% to 4.5%).

Most publishers favor CC BY or CC BY-NC-ND licenses. CC BY is the most common for the majority. However, note that MDPI publishes more under NC-ND than BY.

The underlying data (not shown here) reveal historical patterns in publishers’ growth. 2019’s figures were boosted by IntechOpen, KIT Scientific, and Peter Lang International adding significant numbers of titles. Before 2015, the current top 10 publishers accounted for only around 10% of the index.

Conclusion

The DOAB has seen explosive growth over the last few years. Over the 3 years to 2020, its compound annual growth rate (CAGR) was 53%, compared with 14% for OA journal articles. (The 5-year CAGRs to 2020 are 60% for the DOAB and 15% for journals.)
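To put those rates in perspective, here is a minimal sketch of the standard CAGR formula and the compounding it implies. The helper names `cagr` and `growth_multiple` are ours, and the only inputs are the 3-year rates quoted above; no underlying title counts are assumed.

```python
def cagr(start_value, end_value, years):
    """Compound annual growth rate between two values, as a fraction."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


def growth_multiple(rate, years):
    """Total growth factor implied by compounding `rate` for `years` years."""
    return (1 + rate) ** years


# The 3-year rates quoted in the text: 53% for the DOAB, 14% for OA journal articles.
books_multiple = growth_multiple(0.53, 3)
articles_multiple = growth_multiple(0.14, 3)

print(f"DOAB titles over 3 years:      x{books_multiple:.2f}")
print(f"OA journal articles, 3 years:  x{articles_multiple:.2f}")
```

On these rates, the index would be roughly 3.6 times its size of three years earlier, while OA journal article output would be roughly 1.5 times its earlier level.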

Although some of this is likely a result of the infrastructure becoming more widely adopted, it’s clear that OA books are gaining traction. Growth is driven by larger organizations coming on board, plus a growing long tail of publishers joining OAPEN. The likes of Springer Nature, De Gruyter, KIT, and T&F have been longstanding contributors to the index.

The explosive growth of books should also be put in the context of “high growth from a low starting point”. Absent a definitive index of academic books, we sampled data from a few publishers. The results suggest that barely 1% of their output is in the DOAB on average. So, as with journal article output, we may see growth rates start to fall towards a steady state after the initial cohort of titles is made open.

Comparing patterns in books with those in journals shows that there is a similar level of consolidation in the market. The 10 largest publishers account for around 50% of both books and journal output. License usage, however, appears to be different for books: CC BY-NC-ND appears to be far more prevalent in books than in journals. Books are different beasts from journals, so it’s likely that authors and publishers want greater restrictions. These are intended both to “protect” long-form scholarship, which is so central to tenure and promotion in the Humanities and Social Sciences, and to afford greater commercial opportunities around print and other formats.

With so many unknowns in the current data set, we will need to wait for updates to complete a full analysis. We will run further analysis as more data becomes available to us.

This article is © 2021 Delta Think, Inc. It is published under a Creative Commons Attribution-NonCommercial 4.0 International License. Please do get in touch if you want to use it in other contexts – we’re usually pretty accommodating.