It’s not uncommon for us to hear a society or association voice strong reservations about starting an open access (OA) journal based on a fundamental belief that open access is synonymous with low quality. “A fully open access journal will dilute our brand” is one refrain; “We don’t want to start a ‘journal of rejects’” is another.

This month we decided to dig into the data to see whether this belief is well founded: on average, are fully OA journals of lower, equal, or higher quality than their subscription siblings?

The Usual Disclaimers About Quality

For better or for worse, citation-based metrics are widely used as a proxy measure of journal ‘quality’. The discussions about the merits and difficulties of this practice have been well-rehearsed elsewhere, so we will not cover them here. Whatever changes might happen in future, and for all the faults of the current methods, Impact Factor is currently used as the de facto benchmark. So, when discussing perceptions of journal quality in the current market, it seems reasonable to analyze patterns in such citation-based metrics.

Citation Metrics for the Highest-Ranking Journals

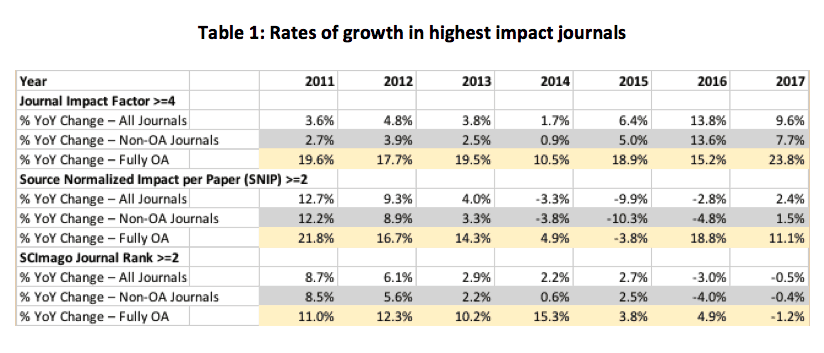

First, we looked at the highest-impact journals as measured by a number of citation-based metrics for the past several years. We compared the Journal Impact Factor (JIF, from Clarivate Analytics’ Journal Citation Reports), CWTS’ Source Normalized Impact per Paper (SNIP), and SCImago Journal Rank (SJR), the latter two being based on Elsevier’s Scopus.

Table 1, below, shows the initial results. Subscription and hybrid journals were counted as ‘Non-OA’ and fully open access journals as ‘Fully OA’. No corrections have been applied to the data to compensate for lead times in data gathering. Remember, too, that because we are counting numbers of journals, the effects of the big megajournals are automatically controlled for: they form just a few of the thousands of journals in our study.

Source: Delta Think analysis, JCR, CWTS and SCImago, (n.d.). SJR — SCImago Journal & Country Rank [Portal]. Retrieved 5th July 2018, from http://www.scimagojr.com

For all three metrics, the number of ‘high quality’ journals is growing faster year-on-year for fully OA journals than for non-OA journals and for the indices as a whole. In other words, fully OA journals appear to be accounting for an increasing share of the top performers. (The notable exception is SJR from 2016 to 2017, although the top fully OA journals had a growth spurt in the previous year, so the trend holds.)
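As a rough illustration of the year-on-year comparison described above, the sketch below computes percentage growth in journal counts for two groups. The counts are hypothetical numbers invented for this example, not figures from Table 1, and the function name `yoy_growth` is our own.

```python
def yoy_growth(counts):
    """Return {year: percentage change from the previous year in the data}."""
    years = sorted(counts)
    return {
        year: 100.0 * (counts[year] - counts[prev]) / counts[prev]
        for prev, year in zip(years, years[1:])
    }

# Hypothetical counts of journals above some impact threshold (assumed values).
fully_oa_top = {2015: 40, 2016: 50, 2017: 60}
non_oa_top = {2015: 1000, 2016: 1050, 2017: 1092}

oa_growth = yoy_growth(fully_oa_top)
non_oa_growth = yoy_growth(non_oa_top)

for year in oa_growth:
    print(year, f"fully OA {oa_growth[year]:.1f}%", f"non-OA {non_oa_growth[year]:.1f}%")
```

Because the fully OA group starts from a much smaller base, modest absolute gains translate into large percentage growth, which is one reason the comparison against overall OA share that follows is useful.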

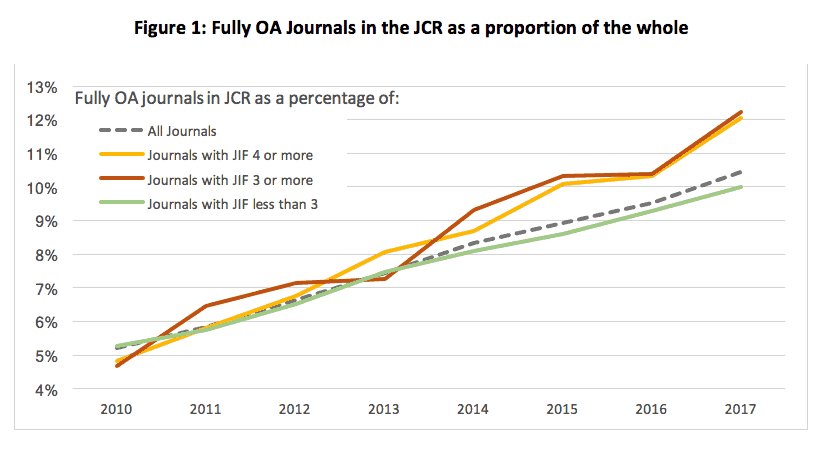

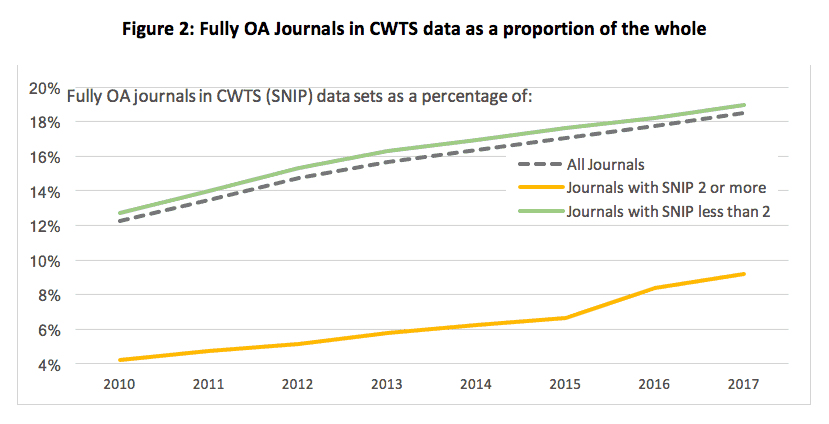

It seemed worth digging further into the data. The tables only show the annual changes in journal numbers for the highest-performing segment. We also need to control for the change in fully OA journal numbers in general, and this is shown in Figures 1 and 2 below.

The charts below show the number of fully OA journals as a percentage of all journals as grey dotted lines. The other colors show what happens if we focus on just journals with the stated measures of impact. For example, the yellow line in Figure 1 shows the number of fully OA journals with a JIF of 4 or more as a percentage of all journals with a JIF of 4 or more. So, by comparing with our dotted grey ‘control’ line we can see if journals of particular levels of impact account for proportionately more or less of their segments than the average.
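The comparison between a colored line and the grey ‘control’ line can be sketched as follows. This is a minimal example assuming a simple list of journal records; the journals, their JIFs and the `oa_share` helper are all hypothetical and stand in for the real index data.

```python
from typing import NamedTuple, Optional


class Journal(NamedTuple):
    title: str
    fully_oa: bool
    jif: float


# Toy records invented for illustration only (not real journals or JIFs).
JOURNALS = [
    Journal("A", True, 5.2),
    Journal("B", False, 4.1),
    Journal("C", False, 1.3),
    Journal("D", True, 0.9),
    Journal("E", False, 6.0),
    Journal("F", False, 2.2),
    Journal("G", True, 4.8),
    Journal("H", False, 0.7),
]


def oa_share(journals, min_jif: float = 0.0) -> Optional[float]:
    """Percentage of journals with JIF >= min_jif that are fully OA."""
    segment = [j for j in journals if j.jif >= min_jif]
    if not segment:
        return None  # no journals in this segment, so no share to report
    return 100.0 * sum(j.fully_oa for j in segment) / len(segment)


control = oa_share(JOURNALS)            # grey dotted line: all journals
high_impact = oa_share(JOURNALS, 4.0)   # colored line: JIF of 4 or more

print(f"control {control:.1f}%, JIF >= 4 {high_impact:.1f}%")
```

In this toy data the fully OA share among journals with a JIF of 4 or more is higher than the share across all journals, which corresponds to a colored line sitting above the grey control line.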

Source: Delta Think analysis, JCR, CWTS and SCImago, (n.d.). SJR — SCImago Journal & Country Rank [Portal]. Retrieved 5th July 2018, from http://www.scimagojr.com


The data suggest that, while the proportion of fully OA journals is growing over time, the proportion of higher-performing fully OA journals is growing faster than that of the average performers. In other words, the high-performing fully OA journals are taking share.

We can also see the differences between the indexing methods. The JCR contains a lower proportion of fully OA journals than CWTS/SCImago (10.5% vs. 19% in 2017, respectively).¹ This makes the growth of the highest-performing fully OA journals in the JCR even more marked: they now account for a disproportionately large share of the top performers. Conversely, in the CWTS/SCImago (i.e. Scopus-based) index, the highest-performing fully OA journals account for a smaller share of the top performers than fully OA journals do of the index as a whole, but they are showing signs of catching up.

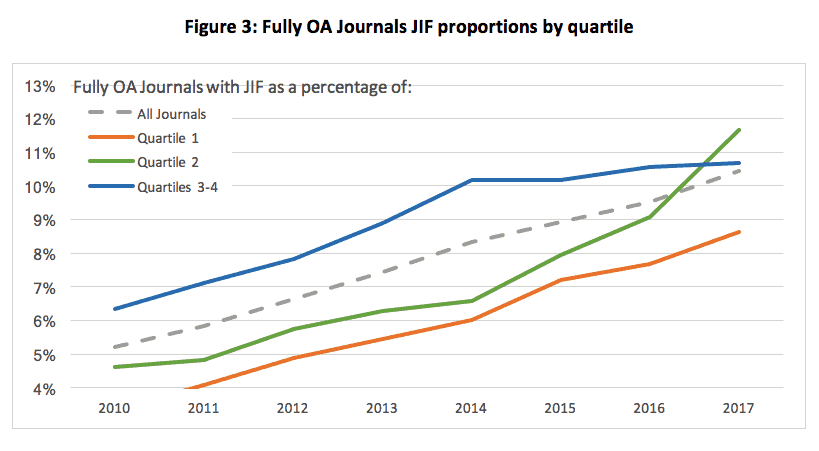

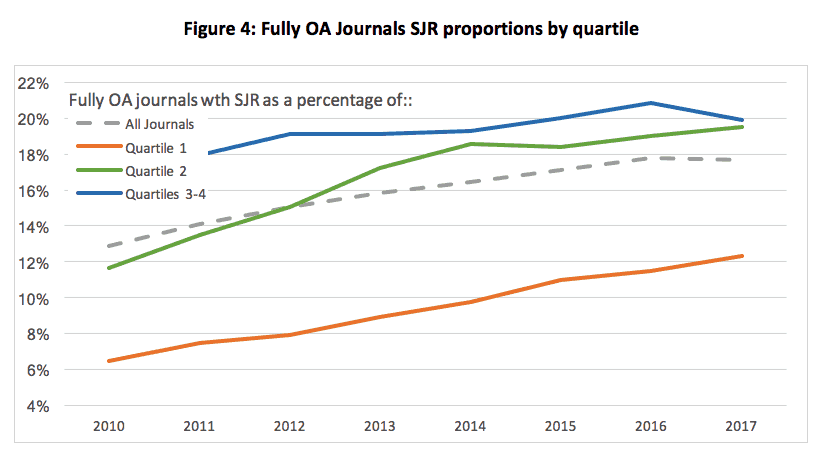

Finally, we can analyze performance by quartiles to tease out some further nuances in the data. The quartile data supplied by the indices also control for the variations in JIFs between different subject areas, so we can get a like-for-like comparison.² Figures 3 and 4 show the results.

Source: Delta Think analysis, JCR, CWTS and SCImago, (n.d.). SJR — SCImago Journal & Country Rank [Portal]. Retrieved 5th July 2018, from http://www.scimagojr.com


In both cases, lower-than-average fully OA journals are decreasing as a proportion both of fully OA output and of the index as a whole. In other words, fully OA journals in general are increasingly likely to be above average, although they have yet to dominate at the very highest levels.
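The quartile view can be sketched in the same spirit. The example below assumes each fully OA journal carries a quartile label from Q1 (top) to Q4 (bottom) and treats Q3 and Q4 as ‘lower than average’; both the labels per year and the `below_median_share` helper are hypothetical illustrations rather than the data behind Figures 3 and 4.

```python
from collections import Counter


def below_median_share(quartiles):
    """Percentage of journals whose quartile label is Q3 or Q4."""
    counts = Counter(quartiles)
    total = sum(counts.values())
    return 100.0 * (counts["Q3"] + counts["Q4"]) / total


# Hypothetical quartile labels for fully OA journals in two years (assumed values).
oa_quartiles_by_year = {
    2016: ["Q1", "Q2", "Q2", "Q3", "Q3", "Q4", "Q4", "Q4"],
    2017: ["Q1", "Q1", "Q2", "Q2", "Q2", "Q3", "Q3", "Q4"],
}

trend = {
    year: below_median_share(labels)
    for year, labels in oa_quartiles_by_year.items()
}

for year, share in sorted(trend.items()):
    print(year, f"{share:.1f}% of fully OA journals in Q3 or Q4")
```

A falling percentage from one year to the next is the pattern described above: fewer fully OA journals sitting in the lower half of their subject categories.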

We know, of course, that JIFs vary by field and that many consider them to be flawed metrics, so we have tried to be fair in our comparison. For example, China famously rewards publication in journals with JIFs of 5 or greater, so we tested these and saw the same patterns as we showed here for JIFs above 3. We also used the multiple data sets (JCR, CWTS and SCImago) to show how the nuances in performance vary among broader samples.

Conclusions

What does this all mean? The data show that an increasing number of fully OA publications are attaining higher impact factors at faster rates than their subscription and hybrid counterparts.

Determining the causes of this observation is difficult. Many studies claim the existence of an Open Access Citation Advantage, which would increase citation-based metrics and therefore ‘quality’ simply by virtue of increased traffic. (There are, however, arguments against this, and even arguments for a possible OA citation disadvantage.) Alternatively, we could be seeing evidence of fully OA journals maturing, with their general quality ‘catching up’ to the average and therefore attracting more citations.

But one thing is clear: there is nothing preventing an OA journal from being ‘high quality’, and based on these data, a fully OA journal’s Impact Factor now appears more likely to be above average for its field.

As is the case with any publication, a journal’s quality is dependent on the mission and objectives of its owner and how those objectives are executed and communicated. OA journals do not dilute journal brands if they maintain the same brand promise as the flagship publication. The data seem to indicate that an increasing number of fully OA journal owners and editorial teams are choosing the ‘higher quality’ path for their publications.

1 Methodological note: we take the SNIP from CWTS data and the fully OA status from SCImago data.

2 Our choices of JIF, SNIP and SJR thresholds above in Figures 1 and 2 fall towards the upper end of the second quartile.