How Diverse Are Your Publications?

What Problem Were We Trying to Solve for our Client?

Diversity, equity, and inclusion (DEI) have become priorities for organizations as they increasingly strive to be responsive to the needs of their communities and to address topics of primary importance to their constituents. Any organization that wants to succeed in today’s market needs to treat DEI as an important component of its current and future strategy.

But how does an organization go about building a baseline or foundation from which to set goals and improve the DEI of their portfolio?

To understand where your organization should be in its DEI makeup and efforts, you must start by benchmarking its current state. One of Delta Think’s scholarly publisher clients recently decided to do just that. Together we pursued a project to understand and benchmark the demographic makeup of the authors and other contributors to its portfolio, as a starting point for implementing processes and procedures that keep it working toward a diverse, equitable, and inclusive community.

What were the Goals & Objectives?

The project goal was to develop a foundational understanding of the demographic makeup of the organization’s portfolio and to offer a path forward for setting organizational goals that sustain DEI efforts across its publications into the future.

Delta Think and the client set out to collect and analyze demographic data for all of the client’s publications to achieve the following more detailed goals:

- Using contributor information from the client’s editorial workflow systems, identify the gender, race/ethnicity, and geographic location of authors, reviewers, editors, and editorial advisory board members for each partner journal over the last three years.

- Provide a comprehensive data analysis report for each publication, including an overview of gender, race/ethnicity, and geographic findings for each publication and editorial team. Benchmark these findings against broader STEM demographic averages to provide a baseline for comparison.

- Develop recommendations for setting goals and for implementing best practices around collecting and storing demographic data going forward, as well as for reporting or sharing the data within the organization and/or publicly.

The key was to establish data that would allow the client to understand its baseline DEI makeup and build goals from it.

What Methodology was Deployed?

Collecting opt-in data from the community is the most accurate approach. However, such efforts have proved slow and time-consuming, and in their early stages they do not produce statistically valid samples. Opt-in collection is the method of choice going forward, but a quick way to establish a baseline has great value while organizations ramp up to collect and store opt-in data and accumulate a sample large enough to be accurate and useful. In the interim, an automated data analysis system lets organizations establish a baseline that provides the foundation needed to set starting goals and measure success.

We first worked with the client to generate activity lists for all of its publications: lists of authors, reviewers, board members, and editors were gathered, consolidated, normalized, cleaned, and readied for analysis. For this client, we started with 500,000 ‘person/activity’ records, which were reduced to 200,000 unique records after cleaning and deduplication.
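The cleaning and deduplication step can be sketched in Python. This is a minimal illustration under stated assumptions, not Delta Think’s actual pipeline: the `ActivityRecord` fields, the hypothetical helpers `normalize_name`, `person_key`, and `dedupe`, and the rule of keying on email with a name-plus-country fallback are all assumptions made for the example.

```python
import unicodedata
from dataclasses import dataclass


@dataclass(frozen=True)
class ActivityRecord:
    # Hypothetical fields; real editorial workflow exports will differ.
    name: str
    email: str
    country: str
    journal: str
    role: str  # e.g. "author", "reviewer", "editor", "board"


def normalize_name(name: str) -> str:
    """Unicode-normalize (NFKC), case-fold, and collapse internal whitespace."""
    text = unicodedata.normalize("NFKC", name)
    return " ".join(text.casefold().split())


def person_key(rec: ActivityRecord) -> tuple[str, str]:
    """Assumed identity rule: prefer email; fall back to name + country."""
    email = rec.email.strip().casefold()
    if email:
        return ("email", email)
    return ("name", f"{normalize_name(rec.name)}|{rec.country.strip().casefold()}")


def dedupe(records: list[ActivityRecord]) -> dict[tuple[str, str], ActivityRecord]:
    """Collapse many person/activity rows into one representative row per person."""
    people: dict[tuple[str, str], ActivityRecord] = {}
    for rec in records:
        # Keep the first row seen for each identity key.
        people.setdefault(person_key(rec), rec)
    return people


records = [
    ActivityRecord("María  López", "MLopez@example.org", "ES", "J1", "author"),
    ActivityRecord("maría lópez", "mlopez@example.org", "ES", "J2", "reviewer"),
    ActivityRecord("Chen Wei", "", "CN", "J1", "editor"),
]
print(len(dedupe(records)))  # → 2
```

This simple keying will not merge the same person appearing once with an email and once without; a production pipeline would need additional matching rules for such cases.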

After some research, we chose the commercial specialist software Namsor to estimate likely gender and ethnicity from patterns in names. Namsor was established more than 10 years ago, has been cited in 162 papers since 2016, and is used by one of the largest international scholarly publishers for its biannual gender reports. Namsor also takes an international outlook, basing its classification algorithms (rules) on global data sets; most alternative suppliers’ systems are based on US name data or much narrower samples.

The software analyzed each contributor’s name and country and returned likely gender and ethnicity categories, each with a probability of a good match based on a number of factors. These estimates were then applied across the individual journals and the portfolio as a whole. Manually gathered and self-declared information was also included, which allowed the team to assess the automated results and calculate their accuracy.
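Assessing the automated results against the manually gathered and self-declared subset amounts to measuring agreement for the people who appear in both sources. The Python sketch below assumes the tool’s output has already been reduced to one category and one probability per person; it does not call Namsor’s API, and the `Estimate` type, the `accuracy_against_declared` function, the `min_probability` cut-off, and the category labels are hypothetical placeholders.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Estimate:
    category: str       # placeholder category label, e.g. "A" or "B"
    probability: float  # tool-reported probability of a good match, in [0, 1]


def accuracy_against_declared(
    estimates: dict[str, Estimate],
    declared: dict[str, str],
    min_probability: float = 0.0,
) -> tuple[float, int]:
    """Share of self-declared people whose automated estimate matches.

    Only people present in both sources, with an estimate at or above
    min_probability, are compared. Returns (accuracy, number_compared);
    returns (0.0, 0) when nobody could be compared.
    """
    compared = 0
    correct = 0
    for person_id, truth in declared.items():
        est = estimates.get(person_id)
        if est is None or est.probability < min_probability:
            continue  # no estimate, or too uncertain to count
        compared += 1
        if est.category == truth:
            correct += 1
    if compared == 0:
        return 0.0, 0
    return correct / compared, compared


# Hypothetical person IDs and labels, for illustration only.
estimates = {
    "p1": Estimate("A", 0.95),
    "p2": Estimate("B", 0.60),
    "p3": Estimate("B", 0.90),
    "p4": Estimate("A", 0.99),
}
declared = {"p1": "A", "p2": "A", "p3": "B"}
print(accuracy_against_declared(estimates, declared, min_probability=0.8))  # → (1.0, 2)
```

Raising `min_probability` trades coverage for accuracy, which is one way to decide how much weight low-confidence estimates should carry.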

An anonymized result set was produced to allow safe reporting. Data was never assigned back to the individuals studied; it was used only in aggregate to show baselines and trends.
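Aggregate-only reporting, together with the benchmarking against broader STEM averages described in the project goals, can be illustrated with a short Python sketch. It assumes each row has already been reduced to a journal and an estimated category, so no personal identifiers reach the report. The function names `category_shares` and `gap_to_benchmark` are hypothetical, and the benchmark figures in the usage example are placeholders, not real STEM data.

```python
from collections import Counter, defaultdict


def category_shares(rows: list[tuple[str, str]]) -> dict[str, dict[str, float]]:
    """Turn anonymized (journal, category) rows into per-journal category shares."""
    counts: dict[str, Counter] = defaultdict(Counter)
    for journal, category in rows:
        counts[journal][category] += 1
    shares: dict[str, dict[str, float]] = {}
    for journal, counter in counts.items():
        total = sum(counter.values())
        shares[journal] = {cat: n / total for cat, n in counter.items()}
    return shares


def gap_to_benchmark(
    shares: dict[str, dict[str, float]],
    benchmark: dict[str, float],
) -> dict[str, dict[str, float]]:
    """Percentage-point gap between each journal's share and the benchmark share."""
    return {
        journal: {cat: 100 * (dist.get(cat, 0.0) - ref) for cat, ref in benchmark.items()}
        for journal, dist in shares.items()
    }


rows = [("J1", "A"), ("J1", "B"), ("J1", "A"), ("J1", "B"), ("J2", "A")]
benchmark = {"A": 0.4, "B": 0.6}  # placeholder values, not real STEM averages
shares = category_shares(rows)
print(shares["J1"])  # → {'A': 0.5, 'B': 0.5}
print(gap_to_benchmark(shares, benchmark))
```

Reporting percentage-point gaps rather than raw counts keeps small and large journals on the same scale when they are compared with portfolio-wide or external averages.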

Finally, it was crucial to manage expectations and understand the limits of the automated methods used. The software only estimates a likely category from name and country metadata, which can serve as a proxy for forming a view of contributors; it does not attempt to classify individual people. We arrived at the final method in consultation with our client, taking into account issues such as non-binary gender identification and appropriate classifications of ethnicity.

What did we find out and how will it be used?

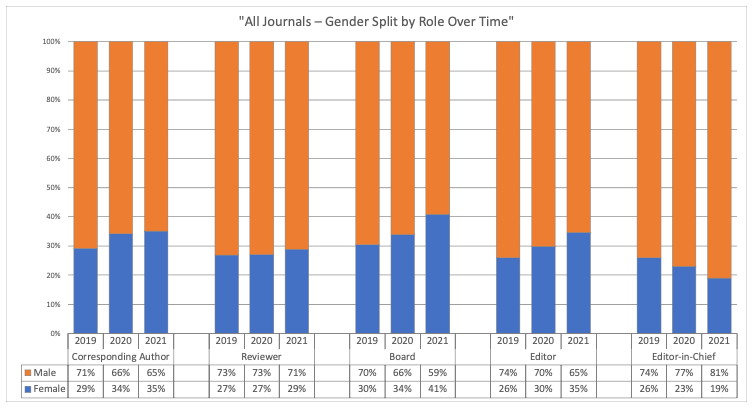

Through this process, the client achieved its goals. Delta Think identified the DEI makeup of the client’s portfolio, compared it to current industry trends, and, most importantly, provided the client with intelligence it could use to set goals for improvement going forward. The sample above is an example of the type of data and analysis this project yielded. We also provided multiple visualizations comparing individual journals and collections of journals with the portfolio-wide averages. These can all be used to identify strengths and weaknesses, to develop strategies that do more of what is working and less of what is not, and to track the impact of changes over time.

How can Delta Think help you?

To set targets and strategies for improving the diversity, equity, and inclusion of your portfolio, you need a starting point on which to base your goals and track the impact of change. Delta Think can help you establish that starting point and set goals based on industry best practices while you develop a longer-term solution built on self-reported, opt-in data and secure tracking.

Contact us today to discuss a DEI project customized to satisfy your specific organizational challenges, goals, and budget.